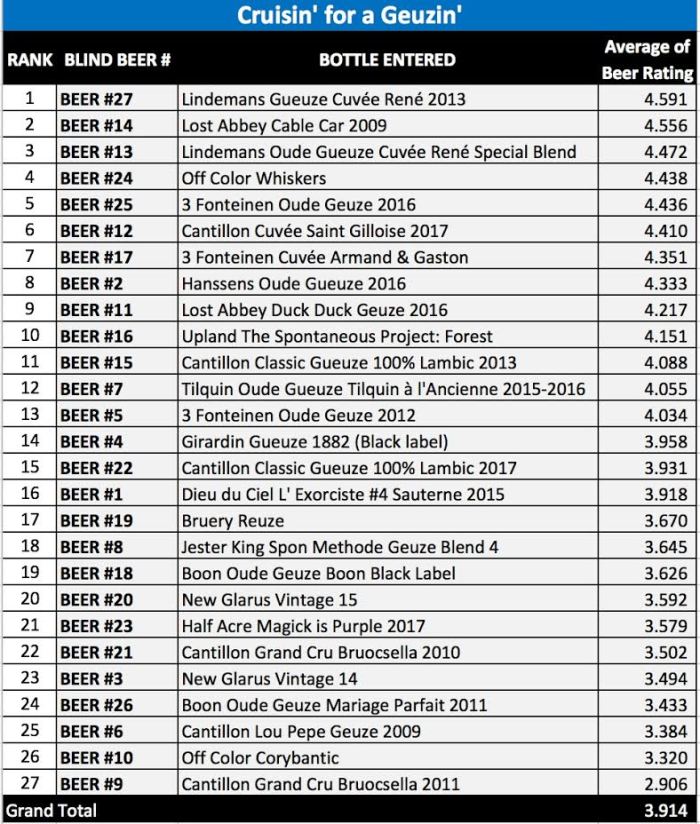

Last Saturday we gathered 12 tasters for our group's first blind bottle share.

The theme, which we decided on and prepared for over several weeks, was Fruitless Geuze/Lambic/American Wild Ale. We collectively amassed 27 bottles for the event. The rules were pretty straightforward.

- Should be geuze, lambic or American Wild Ale, with preference given to non-kettle sours

- Avoid saisons, flanders, oud bruins, goses, and berliners

- No fruit or adjuncts aside from the spontaneous or purposely added bugs

- Each submission should be at least 750ml. You may choose to submit 2x 375s of the same beer instead

In the end a $13 shelfie, Cuvee Rene with 4 years of age, won it. Old Cable Car also lived up to the hype.

Lindeman’s Cuvee Rene Special Blend, long appreciated among lambic friends who shared bottles with me years ago, finished 3rd, and Off Color Whiskers somehow found its way into 4th.

The biggest surprise was Lou Pepe Gueuze ’09 sticker finishing 25th out of 27 bottles.

I think it’s very exciting that most shelf lambic in the blind tasting landed in the middle of the pack (the exception being Boon Mariage Parfait). It shows you don’t necessarily need to endure a long line at Jester King, or trade for the green-bottled flavor of the week, to get great spontaneous beer. That said, the fact that so many Loons and Drie finished in the top third of the field does reinforce that some beers are worth the effort to obtain.

Facts and Observations

- Total corks that hit the ceiling: 3

- All bottles were uncorked, decanted into pitchers and poured by a non-rating member of the group

- Still lambic did not fare well. I would have liked to see whether 3F Doesjel would have done any better

- Upland Forest finishing in the top 10 was a big surprise, because isn’t shitting on Upland still in?

- People have been fairly down on DDG lately, but it still showed up strong

- Not all tasters followed the same scoring method. While most tickers scored as they would on Untappd, at least one taster scored each beer 0-5 based on its strength against the field. We only realized this halfway through. For consistency, we decided to continue scoring the same way for the rest of the event.
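Since the tasters used different scales, one common way to make the scores comparable is to standardize each taster's ratings (subtract that taster's mean, divide by their standard deviation) before averaging per beer. Here is a minimal Python sketch of that idea. The function names, the data layout, and the example scores are all made up for illustration; they are not our actual score sheet, and z-scoring is just one reasonable choice of normalization.

```python
from statistics import mean, pstdev


def normalize_by_taster(scores):
    """Turn {taster: {beer: raw_score}} into {taster: {beer: z_score}}.

    Each taster's scores are centered on their own mean and scaled by
    their own (population) standard deviation.
    """
    normalized = {}
    for taster, ratings in scores.items():
        values = list(ratings.values())
        mu = mean(values)
        sigma = pstdev(values)
        normalized[taster] = {
            # A taster who gave every beer the same score carries no ranking
            # information, so their z-scores are all set to 0.
            beer: (raw - mu) / sigma if sigma > 0 else 0.0
            for beer, raw in ratings.items()
        }
    return normalized


def rank_beers(scores):
    """Average the normalized scores per beer and sort best-first."""
    per_beer = {}
    for ratings in normalize_by_taster(scores).values():
        for beer, z in ratings.items():
            per_beer.setdefault(beer, []).append(z)
    averages = {beer: mean(zs) for beer, zs in per_beer.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical scores: taster_a rates Untappd-style in a narrow band,
# taster_b spreads the field across the whole 0-5 range.
example_scores = {
    "taster_a": {"beer_1": 4.25, "beer_2": 4.0, "beer_3": 3.75},
    "taster_b": {"beer_1": 5.0, "beer_2": 2.5, "beer_3": 0.0},
}

for beer, score in rank_beers(example_scores):
    print(f"{beer}: {score:+.2f}")
```

With raw averages, the taster using the full 0-5 range would pull the results around far more than the one scoring in a narrow band; after standardizing, each taster contributes equally to the final order.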

You really really need to normalize the ratings and average them again. It would give a lot more meaning to the data. If you are willing to send me the raw data I would do it for you. I might even include some other metrics that give insight into the ratings. This is incredibly interesting to me so I would be really interested in seeing the normalized results.


Excellent article. I’m facing some of these issues as well.
